The Risks of Relying on Older OpenAI API Versions in the Wake of GPT-5's Launch

On August 7, 2025, OpenAI unveiled GPT-5, its most advanced AI model to date, marking a significant leap in capabilities like reasoning, coding, and factual accuracy. This release, now rolling out across ChatGPT, the API, and enterprise tools, promises enhanced performance with fewer errors—boasting around 80% fewer factual mistakes than prior models on benchmarks like LongFact and FactScore. However, for developers and businesses who have built applications around previous ChatGPT versions via OpenAI's API (such as GPT-4, GPT-4o, or GPT-3.5), this launch introduces several potential pitfalls. From abrupt deprecations to compatibility headaches and heightened security concerns, the transition isn't seamless. Drawing from recent expert insights and industry discussions, this post explores these risks and why proactive migration planning is essential.

1. Model Deprecation and Sudden Loss of Access

One of the most immediate risks is OpenAI's decision to deprecate older models, potentially leaving integrations in limbo. Following the GPT-5 rollout, OpenAI has begun pulling access to legacy models like GPT-4o, GPT-4.1, and others in the ChatGPT interface, with API deprecations likely to follow. This has already caused disruptions: some businesses reported losing access to custom workflows for up to a week, forcing hasty switches to GPT-5.

AI expert and former OpenAI founding member Andrej Karpathy highlighted similar issues in past transitions, noting that deprecations can catch developers off-guard. In a discussion on API changes, he warned: "I should clarify that the risk is highest if you're running local LLM agents (e.g. Cursor, Claude Code, etc.). If you're just talking to an LLM on a website (e.g. ChatGPT), the risk is much lower *unless* you start turning on Connectors." Karpathy's point underscores how even non-API users could face indirect fallout if they rely on integrated features.

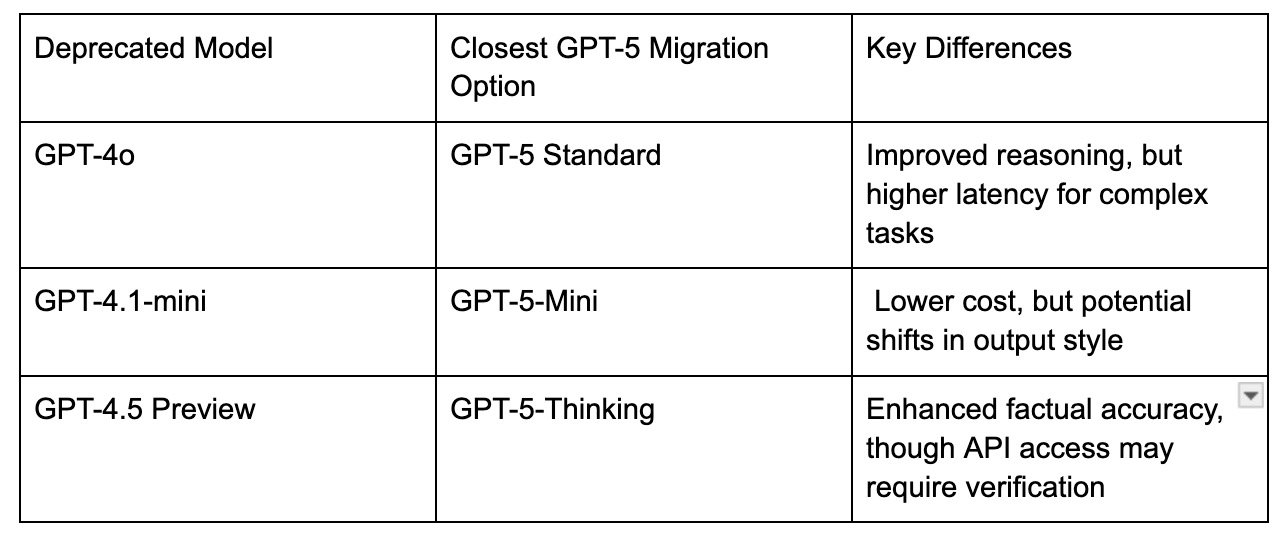

Developer Himanshu Sharma echoed this in a recent post, advising: "With the advent of GPT-5, OpenAI has officially deprecated many of its earlier models (like GPT-4o, GPT-4.1, GPT-4.5, GPT-4.1-mini, o4-mini etc). If your workflows rely on these older models, they might stop working." He recommended testing on GPT-5 variants and preparing fallback logic, as existing chats or apps may auto-switch, altering outputs unexpectedly

This table, inspired by Sharma's analysis, illustrates migration paths but highlights the risk of inconsistent behavior during the shift.

2. Compatibility Issues and Workflow Disruptions

Even if older APIs remain temporarily available, GPT-5's architectural changes could break compatibility in subtle ways. The new model prioritizes "safe completions" over outright refusals for risky queries, which might alter response patterns in applications built for stricter older versions. Developers have reported quirks, such as streaming errors or invalid API calls when using deprecated schemas.

Petri Kuittinen, a programmer, vented frustration over API breakage: "It gets even worse. Previously you could stream the responses with ANY OpenAI model. Now API keys me errors if I try to stream with 'gpt-5'. I need to verify my organization to do that!" Such issues could cascade in production environments, especially for real-time applications like chatbots or code generators.

OpenAI's own developer community forums are abuzz with complaints, like one user stating: "I've been using OpenAI API models for over 3 years now, but this doesn't make any sense." The risk here is not just technical—it's operational, as teams scramble to refactor code amid tight deadlines.

3. Escalating Costs and Resource Demands

GPT-5's power comes at a price. API usage is billed at $1.25 per 1M input tokens and $10 per 1M output tokens—potentially a steep hike for high-volume users accustomed to cheaper legacy models. For enterprises scaling AI integrations, this could inflate budgets unexpectedly, especially if deprecations force immediate upgrades

Theo, a developer and CEO at t3dotchat, critiqued OpenAI's pricing inconsistencies: "It's genuinely weird that the same company has the best value option (o4-mini) and also the worst value option (o1-pro). We're talking about a >100x price increase for a WORSE model." While referring to prior models, his sentiment applies here: GPT-5's premium tier might not justify the cost for all use cases, risking financial strain for smaller devs.

4. Heightened Security and Ethical Risks

GPT-5 introduces advanced agentic features, but these amplify vulnerabilities like prompt injection and data leakage. OpenAI has added mitigations, yet experts warn of persistent dangers. For instance, integrating connectors or agents could expose sensitive data, as Karpathy noted: "imagine ChatGPT telling everything it knows about you to some attacker on the internet just because you checked the wrong box in the Connectors settings."

Recent incidents highlight this: OpenAI revoked API access for a developer who built a weaponized turret using the Realtime API, citing policy violations. Rohan Paul, an AI analyst, commented: "This incident amplifies concerns about AI’s potential role in automating military-grade systems... Combined with advances in 3D-printed weapon parts, DIY autonomous systems could become an escalating security risk."

Manipulation via hidden text in searches is another worry, as media entrepreneur Mario Nawfal reported: "OpenAI's new search feature can be manipulated by websites using invisible text, potentially endangering users with fake product reviews and malicious code." A crypto hack via compromised code cost one user $2,500, underscoring the need for verification.

Jason Stapleton, a tech commentator, added: "Using on 'ChatGPT Agent' is NOT safe for most tasks OpenAI warns against using it for any kind of transaction due to data risks."

Mitigating the Risks: Steps Forward

While GPT-5's launch heralds exciting advancements, the risks for legacy API users are real and multifaceted. To navigate this:

Audit and Test: Review dependencies on older models and test migrations to GPT-5 variants.

Budget for Changes: Factor in new API costs and potential downtime.

Enhance Security: Implement safeguards against prompt injection and limit connector usage.

Stay Informed: Monitor OpenAI's developer forums and announcements for deprecation timelines.

As Sharma advises, "start preparing fallback logic" now. The era of GPT-5 is here, but clinging to the past could prove costly—embrace the upgrade thoughtfully to avoid getting left behind.